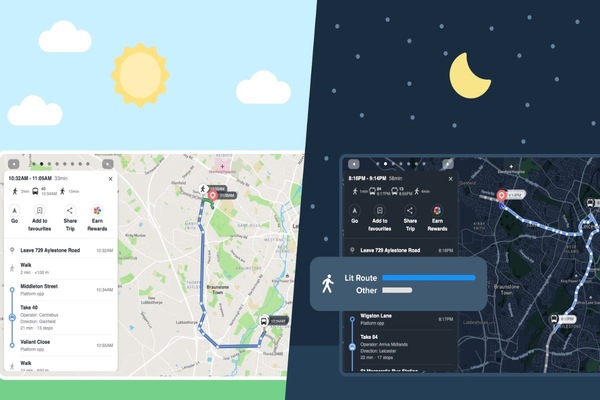

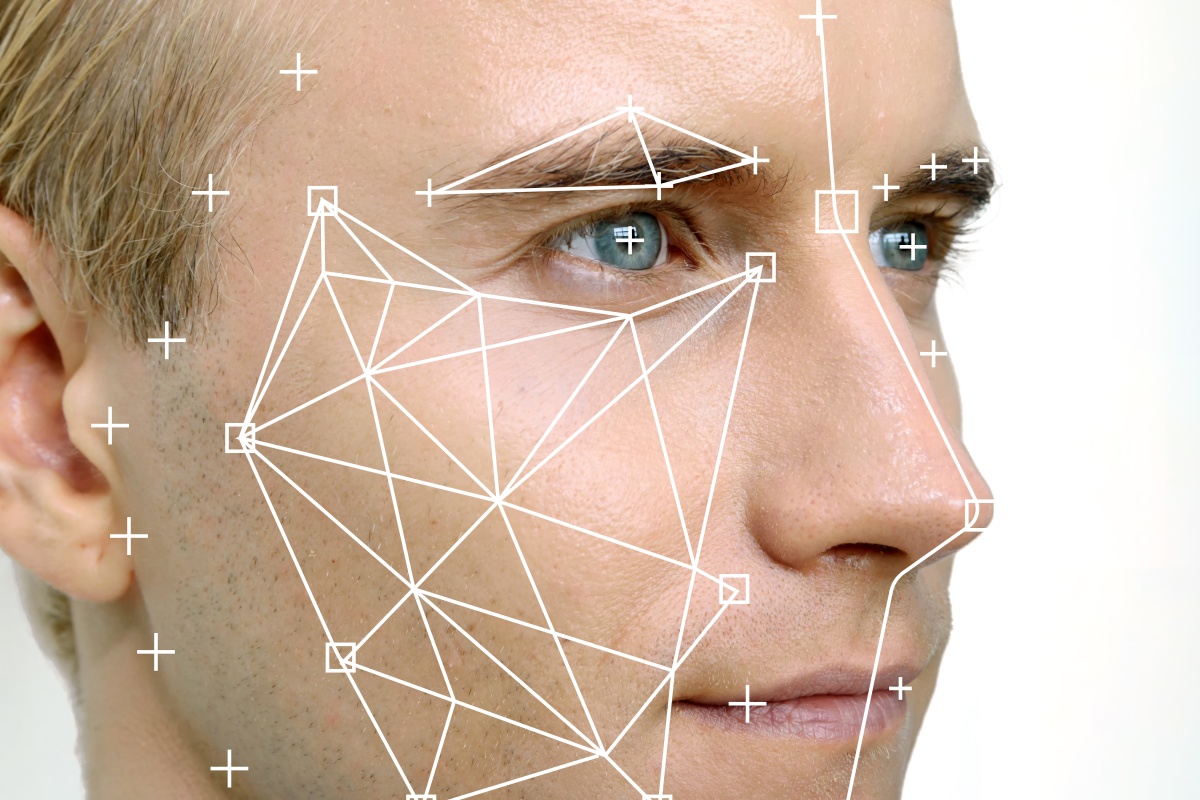

Ethics panel sets out future guidelines for using facial recognition software

Software checks people passing a camera in a public place against an image database
